Meredith and TQ's stories: Too Late for the Pebbles to Vote

A bad actor who’s clever about repeatedly pushing ever closer to that line, or who crosses it but takes care not to leave evidence that would convince a jury beyond a reasonable doubt, is one who knows exactly what s/he’s doing and is gaming the system.

Published August 2016 by Meredith L. Patterson on Status451.com

There’s a pattern most observers of human interaction have noticed, common enough to have earned its own aphorism: “nice guys finish last.” Or, refactored, “bad actors are unusually good at winning.” The phenomenon shows up in business, in politics, in war, in activism, in religion, in parenting, in nearly every collaborative form of human undertaking. If some cooperative effort generates a valuable resource, tangible or intangible, some people will try to subvert the effort in order to divert more of that resource to themselves. Money, admiration, votes, information, regulatory capacity, credibility, influence, authority: all of these and more are vulnerable to capture.

Social engineering, as a field, thus far has focused primarily on hit-and-run tactics: get in, get information (and/or leave a device behind), get out. Adversaries who adaptively capture value from the organizations with which they involve themselves are subtler and more complex. Noticing them, and responding effectively, requires a different set of skills than realizing that’s not the IT guy on the phone or that a particular email is a phish. Most importantly, it requires learning to identify patterns of behavior over time.

Having recently been adjacent to the sudden publicity of one such pattern of behavior, I have a lot to discuss about the general mechanisms that give rise to both these patterns and the criticality events — the social media jargon is “shitstorms” — they occasionally generate, and also about this specific incident. We’re going to talk about narcissism and its side effects, and how bad actors can damage good organizations. We’re going to talk about how bad things happen to good people, how all kinds of people make bad decisions, and also how organizations live and die. We’re going to talk about self-organized criticality. There will be game theory, and management theory, and inside baseball, and multiple levels from which to view things, and even a diagram or two, so if diagrams aren’t your thing, you might as well bail out now. There will also be some practical advice, toward the end.

But first, let’s talk about David Chapman’s 2015 essay, “Geeks, MOPs, and sociopaths in subculture evolution.”

In Chapman’s analysis, a subculture’s growth passes through three phases. First come the geeks, the creators and their True Fans whose interest in a niche topic gets a scene moving. Then come the MOPs, short for “Members Of Public,” looking for entertainment, new experiences, and something cool to be part of. Finally, along come the sociopaths, net extractors of value whose long-term aim is to siphon cultural, social, and liquid capital from the social graph of geeks and MOPs. Sociopaths don’t just take, unless they’re not very good at what they do. Many sociopaths contribute just enough to gain a reputation for being prosocial, and keep their more predatory tendencies hidden until they’ve achieved enough social centrality to be difficult to kick out. It’s a survival strategy with a long pedigree; viruses that burn through their host species reservoir too quickly die off.

Corporations, of course, have their own subcultures, and it’s easy to see this pattern in the origin stories of Silicon Valley successes like Google, and also in those of every failed startup that goes under because somebody embezzled and got away with it. Ditto for nonprofits, activist movements, social networking platforms, and really anything that’s focused on growth. Which is a lot of things, these days.

Organizations have a strong incentive to remove net extractors of value. Would-be net extractors of value, then, have an even stronger incentive to keep themselves connected to the social graph. The plasticity of the human brain being what it is, this sometimes leads to some interesting cognitive innovations.

Narcissism, for example, when it rises to the level of a pathology, is a personality disorder. This is not sufficient, in and of itself, to qualify someone as a sociopath in Chapman’s model. A narcissist who knows what kind of behavior s/he is capable of, keeps capital-siphoning behaviors (like claiming credit for others’ work) in check, and remains a net contributor of value even when that contribution isn’t aligned with his/her personal incentives, is by definition not a sociopath. However, a large social graph can be a tempting source of narcissistic supply, the interpersonal support that feeds a narcissist’s fragile and hungry ego. A narcissist who coerces or cons others into maintaining the “superman” narrative that papers over that damaged ego is a narcissistic sociopath. Other personality disorders can develop in similar ways, such as with borderline sociopaths, who coerce or con others into holding up the black-and-white, good-versus-evil lens through which the borderline sees the world. A mere personal dysfunction, once weaponized, becomes something much larger and more dangerous.

If you’ve ever seen an apparently-thriving group suddenly implode, its members divided over their opinions about one particular person, chances are you’ve seen the end of a sociopath’s run. Last December, progressive PR firm FitzGibbon PR collapsed when it came out that founder Trevor FitzGibbon had a pattern of sexually assaulting and harassing his employees and even some of his firm’s clients. However, the progressivism is what elevates the FitzGibbon story to “man bites dog” levels of notoriety. Everyone loves to watch a hypocrite twist in the wind. Usually one hears about sociopath-driven organizational meltdowns through the grapevine, though, not the media. Fearing repercussions or bad publicity, firms often equivocate about the reasons behind a sudden departure or reorganization. This tendency is understandable from a self-preservation perspective, but it also covers a sociopath’s tracks. Ejected from one firm, a serial net extractor of value can pick right back up at another one. (Indeed, FitzGibbon had been disciplined for harassment at his previous firm.)

Which brings us to the Tor Project.

Tor is an anonymous routing network. Journalists, dissidents, law enforcement, queer people, drug dealers, abuse victims, and many other kinds of people who need privacy send and receive their Internet traffic through Tor’s encryption and routing scheme in order to keep site operators from knowing who and where they are. It’s an intricate system with a lot of moving parts, supported by a foundation that pays its developers through the grant funding it brings in. And about two months ago, Tor’s most visible employee, Jacob “ioerror” Appelbaum, abruptly resigned.

Before coming to Tor, Appelbaum already had a history of value-extracting behavior only occasionally noticeable enough to merit discipline. His 2008 Chaos Communication Congress talk presented, without credit, research that he had wheedled out of Len Sassaman, Dan Kaminsky, and me the previous year. Other researchers, like Travis Goodspeed and Joe Grand, learned the hard way that to work “with” Appelbaum meant to have him put in no effort, but take credit for theirs. As Violet Blue points out, his ragequit from San Francisco porn producer Kink.com followed a flotilla of employee rulebook updates he’d personally inspired.

There’s never a convenient time for a scandal involving a decade-plus of sexual and professional misconduct, and organizational cover-ups thereof, to break. It’s easy to think “oh, I have a lot on my plate right now,” or “oh, it’s not really my problem,” and keep your head down until the chaos subsides. I could have exercised either of those options, or any one of half a dozen others, when Tor announced Appelbaum’s resignation in a one-sentence blog post a week and change before my wedding. But there’s never a good time for a pattern of narcissistic sociopathy to be exposed; there is only too late, or even later. So I got vocal.

https://t.co/A47YgBKXQl is a gross disservice to the Tor community. People deserve to know why Tor evicted its resident sociopath.

— Meredith L. Patterson 💉💉 (@maradydd) June 3, 2016

Tor had the chance to nip this in the bud back when Jake was just a plagiarist. They ignored it, and he graduated to sexual assault.

— Meredith L. Patterson 💉💉 (@maradydd) June 3, 2016

.@mmeijeri Jake finally raped enough people that Tor as an organisation couldn't ignore it anymore.

— Meredith L. Patterson 💉💉 (@maradydd) June 3, 2016

So did some other folks.

And Tor confirmed that Appelbaum had resigned over sexual misconduct. Nick Farr went public about how Appelbaum had stalked and intimidated him at a conference in Hamburg in December 2013. Appelbaum vowed he’d done nothing criminal and threatened legal action, and the media circus was on.

It turns out that when seven pseudonymous people, and a small handful of named ones, speak up in a situation like this one, reporters really, really want to talk to the people with real names attached. At the time, I was in Houston, taking care of final preparations for my June 11th wedding on Orcas Island. I also spent a lot of that time fielding journalists’ questions about things I’d learned from members of the community about Tor’s little “open secret,” about Appelbaum’s plagiarism, and about observing Appelbaum manhandling a woman in a bar from my vantage point about twenty feet away. Then I got on a plane, flew to Seattle, got on a ferry, and didn’t open my laptop until I returned to work the following Monday.

During that period, Appelbaum’s publicist apparently tracked down and released a statement from the woman involved in the bar incident, Jill Bähring.

Bähring avers that her interactions with Appelbaum were entirely consensual, which I am relieved and pleased to hear. I’m not sure why anyone would expect any other reaction out of me, seeing as how I’ve sung the praises of making mistakes and owning them in public for so long that I’ve given invited talks on it. The interaction I observed took place within my line of sight but out of my earshot, and if I misinterpreted it, then I genuinely am sorry about that. Ultimately, Bähring makes her own decisions about what she consents to or doesn’t. If I was mistaken, well, good.

But Leigh Honeywell also makes her own decisions about what she consents to or doesn’t, and Karen Reilly likewise. Attacking me over a misinterpretation may be enough to distract some people from the full scope of a situation, but nothing about my error invalidates Honeywell’s and Reilly’s accounts of their own experiences. Isn’t it interesting that someone whose first public response to allegations of wrongdoing was “I apologize to people I’ve hurt or wronged!” hasn’t had a single word to say to either one in two months? Or to Alison Macrina, or to Isis Lovecruft? It’s as if the proverbial defendant charged with murder, arson, and jaywalking had no response to the murder or arson counts, but wanted to make damned sure the whole world knew he wasn’t a jaywalker.

*slow clap*

How low-rent of a publicist do you have to hire for them not to be able to keep a story that simple consistent? All Appelbaum had to do was swallow his pride and ask his publicist to bang out an apology of the “I’m sorry you feel that way” variety, and he could have maintained the semblance of high ground he tried to stake out in his initial statement. But the need to be adored — the narcissist’s defining quality, and the sociopath’s first rule of survival — is simply too alluring, the opportunity to gloat over seeing one’s prey stumble too difficult to resist.

Attention is a scarce commodity. What a person expends it on reveals information about that person’s priorities.

Isn’t it interesting when people show you what their preferences really are?

But that’s more than enough narcissistic supply for that particular attention junkie. Let’s talk about preference falsification spirals.

Honeywell correctly observes that whisper networks do not transmit information reliably. In her follow-on post, she advocates that communities “encourage and support private affinity groups for marginalized groups.” If this worked, it would be great, but Honeywell conveniently neglects to mention that this solution has its own critical failure mode: what happens to members of marginalized groups whom the existing affinity group considers unpersons? I can tell you, since it happened here: we had to organize on our own. Honeywell’s report came as a surprise to both me and Tor developer Andrea Shepard, because we weren’t part of that whisper network. Nor would we expect to be, given how Honeywell threw Andrea under the bus when Andrea tried to reach out to her for support in the past. If your affinity group refuses to warn or help Certain People who should otherwise definitionally fall under its auspices, then what you’re really saying is “make sure the sociopath rapes an unperson.”

Thanks, but no thanks. Nobody should have to suck up to an incumbent clique in order to learn where the missing stairs are. The truly marginalized are those with no affinity group, no sangha. Who’s supposed to help them?

It’s a tough question, because assessing other people’s preferences from their behavior can be difficult even when they notionally like you. I was surprised, after the news of the extent of Appelbaum’s behavior broke, to learn that several acquaintances whom I had written off as either intentionally or unintentionally enabling him (in the end, it doesn’t really matter which) had actually been warning other people about him for longer than I had. How did I make this mistake?

Well, social cartography is hard. Suppose you’re at an event full of people you kinda-sorta know, and one person who you know is a sociopath. If you decide to stick around, how do you tell who you can trust? Naïvely, anyone who’s obviously buddy-buddy with the sociopath is right out. But what about people who interact with the sociopath’s friends? During my brief and uneventful stint as an international fugitive, both friends and friends-of-friends of the pusbag who was funneling information about me to the prosecution were happy to dump information into that funnel. I learned the hard way that maintaining a cordon sanitaire around a bad actor requires at least two degrees of separation and possibly more. Paranoid? Maybe. But the information leakage stopped. (If I suddenly stopped talking to you sometime in 2009, consider whether you have a friend who is a narc.)

Consider, however, how this plays out in tightly connected groups, where the maximum degree of separation between any two people is, let’s say, three. Suppose that Mallory is a sociopath who has independently harmed both Alice and Bob. Suppose further that Alice and Bob are three degrees of separation from one another, and each has an acquaintance who is friends with Mallory. Let’s call those acquaintances Charlie and Diane, respectively.

Mallory, the sociopath, is one degree of separation away from each of Charlie and Diane, who are also one degree away from each other. Charlie’s friend Alice and Diane’s friend Bob are three degrees apart.

If Alice sees Diane and Mallory interacting, and then sees Diane and Bob interacting, the two-degrees-of-separation heuristic discourages Alice from interacting with Bob, since Bob appears to be a friend of a friend of Mallory. Likewise for Bob and Charlie in the equivalent scenario. How can Alice and Bob each find out that the other is also one of Mallory’s victims, and that they could help each other?
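The two-degrees heuristic is mechanical enough to write down, which makes its failure mode easy to see. Below is a minimal sketch, assuming the friendship graph is undirected and contains exactly the ties described for Alice, Bob, Charlie, Diane, and Mallory. The helper names `degrees_from` and `within_cordon` are hypothetical, and `radius=2` stands in for "two degrees of separation."

```python
from collections import deque


def degrees_from(graph, source):
    """Breadth-first search: degrees of separation from source to everyone reachable."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        person = queue.popleft()
        for friend in graph[person]:
            if friend not in dist:
                dist[friend] = dist[person] + 1
                queue.append(friend)
    return dist


def within_cordon(graph, bad_actor, radius=2):
    """Everyone other than bad_actor within `radius` degrees: the cordon sanitaire."""
    dist = degrees_from(graph, bad_actor)
    return {person for person, d in dist.items() if 0 < d <= radius}


# Undirected friendships from the Alice/Bob/Charlie/Diane/Mallory scenario.
edges = [("Alice", "Charlie"), ("Charlie", "Mallory"), ("Charlie", "Diane"),
         ("Diane", "Mallory"), ("Diane", "Bob")]
graph = {}
for a, b in edges:
    graph.setdefault(a, set()).add(b)
    graph.setdefault(b, set()).add(a)

print(degrees_from(graph, "Alice")["Bob"])      # 3
print(sorted(within_cordon(graph, "Mallory")))  # ['Alice', 'Bob', 'Charlie', 'Diane']
```

Both victims land inside the radius-2 cordon around Mallory, so each one's heuristic screens out the other. The silo is produced by the rule itself, with no extra effort from Mallory.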

In business management, this kind of problem is known as an information silo, and it is a sociopath’s best friend. Lovecruft describes several of Appelbaum’s siloing techniques, such as threatening to smear anyone who spoke out against him as a closet fed. As recently leaked chat logs show, affiliation with intelligence agencies is a genuine hazard for some Tor contributors, which means that “fedjacketing” someone, or convincing others that they’re actually a fed, is an attack which can drive someone out of the community. (Side note: how the hell does urbandictionary.com not have an entry for fedjacketing yet? They have snitch jacket, with which Appelbaum has also threatened people.) But you don’t have to be someone for whom a fedjacketing would be career death for a sociopath to put you in an information silo. Fedjacketing is merely the infosec reductio ad absurdum of the reputational-damage siloing technique. If someone has made it clear to you that they’ll ruin your reputation — or any other part of your life — if you so much as breathe about how they treated you, that’s siloing. Pedophiles do it to children (“don’t tell your mom and dad, or they’ll put us both in jail”), cult leaders do it to their followers — anyone a sociopath can emotionally blackmail, s/he can isolate.

Discussing this with one acquaintance I had misread as an enabler, I asked: what should Alice and Bob do? “When in doubt, it might be a good idea to ask,” they suggested. But this presupposes that at least one of Alice and Bob is siloed loosely enough for asking to be a viable option. My acquaintance also allowed that they had misread still other people for years simply because they didn’t know that those people had cut ties with Appelbaum. I didn’t know my acquaintance’s preferences, nor they mine. My acquaintance didn’t know the other people’s preferences, nor vice versa. Because none of us expressed our preferences freely, we all falsified our preferences to one another without trying to, which I’m sure Appelbaum appreciated. People believed they had to play by the standard social rules, and that civility gave him room to maneuver. Once a sociopath achieves social centrality, concealed mistrust creates more information silos than the sociopath could ever create alone.

What else creates information silos? In some cases, the very people who are supposed to be in a position to break them down. Sociopaths don’t only target victims. They also target people in positions of authority, in order to groom them into enablers. This happened at Tor. The “open secret” was so open that, when Appelbaum didn’t show up to a biannual meeting in February and people put up a poster for others to write messages to him, someone wrote, “Thanks for a sexual-assault-free Tor meeting!” This infuriated one of the organizers, who had to be talked down from collecting handwriting samples to identify the writer. At an anonymity project, no less. Talk about not knowing your demographic.

This abandonment of the community’s core values speaks to just how far someone can be groomed away from their own core values. In part, this may have been due to Appelbaum’s dedication to conflating business and personal matters — playing off people’s unwillingness to overlap the two. When this tactic succeeds against a person in a position of organizational power, it incentivizes them to protect their so-called friend, to the overall detriment of the organization.

Friendship is all well and good, right up to the point when it becomes an excuse to abdicate a duty of care. You know, like the one a meeting organizer takes on with respect to every other attendee when they accept the responsibility of organization. If the organizer knew that his “friend” had serious boundary issues, why the hell didn’t he act to protect or at least warn people at the meetings Appelbaum did attend? As enablers, people in positions of authority are a force multiplier for sociopaths. Sociopaths love to recruit them as supporters, much as a middle-school Queen Bee puts on her most adorable face for the vice-principal. Why put in the effort to threaten victims when your pet authority figure will gladly do it for you? Co-opted authority figures turn preference falsification cascades into full-on waterfalls.

Scott Alexander muses:

I wonder if a good definition for “social cancer” might be any group that breaks the rules of cooperative behavior that bind society together in order to spread more quickly than it could legitimately achieve, and eventually take over the whole social body.

One cell in the body politic mutates, and starts to recruit others. Those recruited cells continue to perform the same functions they always have — building structures, transmitting signals, defending nearby cells — but now they do it in service of that mutant cell line. The tumor is the silo. If you find yourself breaking the rules — or, worse, your rules, your personal ethics — for someone on a regular basis, consider whether that charming friend of yours is inviting you to be part of their tumor.

How do you bust out of a sociopath’s information silo? Personally, I take my cues from Captain James Tiberius Kirk: when the rules are arrayed against you, break them. When a sociopath tries to leave you no “legitimate” maneuvers, Kobayashi Maru that shit as hard as you possibly can.

I also take cues from my husband. TQ has interacted with Appelbaum exactly twice. The first time, Appelbaum physically shoved him out of the way at my late husband Len’s wake in order to stage a dramatic fauxpology for plagiarizing me, Len, and Dan in 2008, begging to “put our differences aside.” (Protip: when someone later tries to shut you up about something they did to you because “we reconciled!”, it wasn’t a real apology in the first place.) The second time, Appelbaum walked up and sat on him.

Appelbaum behaves as if TQ were an object. Operationalizing people — understanding them as a function of what they can do, rather than who they are — is one thing. We’re autistic; we do it all the time. Operationalizing people without concern for their preferences or their bodily integrity is another thing entirely. Since then, with no concern whatsoever for social niceties, any time anyone has brought Appelbaum up in TQ’s presence, he asks, “Why are you giving the time of day to a sociopath?” It isn’t polite, but it sure does break the ice quickly.

Similarly, a few years ago, Appelbaum applied to speak at a conference TQ and I regularly attend. This conference is neither streamed nor recorded, and speakers are encouraged to present works in progress. The organizer contacted us, unsure how to handle the situation. TQ replied, “I would no more invite a plagiarist to an unfinished-work conference than I would a pedophile to a playground.” The organizer rejected Appelbaum, and the conference went on theft-free. That’s more than a lot of other conference organizers, some of whom knew better, can say.

The one thing that protects sociopaths the most is their victims’ unwillingness to speak up, because the one thing that can hurt a sociopath is having their extraction racket exposed for the fraud it really is. People fear social repercussions for standing up to the Rock Star or the Queen Bee, but consider: if someone is stupid, venal, or corrupt enough to be a sociopath’s enabler, why would you even want to give them any of your social capital in the first place? You might feel like you have to, for the sake of social harmony, or because the subcultural niche that the sociopath has invaded is important to you, or because it’s your workplace and you really need the job. Even sociopaths themselves can experience this pressure. On Quora, diagnosed sociopath Thomas Pierson explains:

Why do [sociopaths] lie and manipulate? Because people punish you when you tell them the truth.

Giving in to fear, to the detriment of those around you, is how you become the bad guy. Lies don’t really protect anyone. They only kick the can down the road, and the reckoning will only be worse when it eventually comes. Suppressing the truth out of fear of being punished is the same as paying the Danegeld out of fear of being overpowered. It’s a form of the sunk cost fallacy, and Kipling had the right of it:

And that is called paying the Dane-geld;
But we’ve proved it again and again,
That if once you have paid him the Dane-geld
You never get rid of the Dane.

Social capital isn’t some magical thing that some people have and others don’t. Like any other form of currency, the locus of its power is in its exchange. (Yeah, we really are all Keynesians now.) In the case of social exchanges, those currencies are information, attention, and affective empathy. Sociopaths try to keep their victims from having relationships the sociopath isn’t involved in, because those are the relationships the sociopath can’t control or collect rent on in the form of secrets or adulation. Building up those relationships — from finding other victims, all the way up to entire parallel social circles where known sociopaths are unwelcome and their enablers receive little to no interaction — incrementally debases the sociopath’s social currency, faster and faster as the graph expands.

Internalizing this grants you a superpower: the power of giving exactly zero fucks. It’s the same power of giving zero fucks that Paulette Perhach writes about in A Story of a Fuck Off Fund, only denominated in graph connectivity rather than dollars. It takes the same kind of effort, and it pays off in the same kind of reward. When you give no fucks and tell the truth about a sociopath, two things happen. First, people who have been hurt and haven’t found their superpower yet will come find you. Second, the sociopath starts flailing. (One benefit of being right is that the facts line up on your side.) As accounts of the sociopath’s misdeeds come out, the sociopath’s narrative has to become more and more convoluted in order to keep the fanboys believing. “They’re all feds!” he shrieks. “Every last one of them!”

Uh-huh. Sure. Because the feds always assign multiple agents not only to target one guy who can’t even keep his dick in his pants, but to become his coworkers, don’t they? This is not exactly an inexpensive proposition. Reality check: if the feds had wanted to pull a honeytrap (which there’d be no reason to do, given his mascot-only status at Tor), everything would have been a lot more cut-and-dried.

Threats that work well in a silo don’t necessarily work so well at scale.

Of course, an actual programmer would know that scaling is hard.

Tomorrow, we’ll explore why that is.

Too Late for the Pebbles to Vote Part 2

Previously, we discussed how sociopaths embed themselves into formerly healthy systems. Now let’s talk about what happens when those systems undergo self-organized criticality.

Consider a pile of sand. Trickle more sand onto it from above, and eventually it will undergo a phase transition: an avalanche will cascade down the pile.

As the sand piles up, the slope at different points on the surface of the pile grows steeper, until it passes the critical point at which the phase transition takes place. The trickle of sand, whatever its source, is what causes the dynamical system to evolve, driving the slope ever back up toward the critical point. Thanks to that property, the critical point is also an attractor. However, crucially, the overall order evident in the pile arises entirely from local interactions among grains of sand. Criticality events are thus self-organized.

Wars are self-organized criticality events. So are bank runs, epidemics, lynchings, black markets, riots, flash mobs, neuronal avalanches in your own brain’s neocortex, and evolution, as long as the metaphorical sand keeps pouring. Sure, some of these phenomena are beneficial — evolution definitely has a lot going for it — but they’re all unpredictable. Since humans are arguably eusocial, it stands to reason that frequent unpredictability in the social graphs we rely on to be human is profoundly disturbing. We don’t have a deterministic way to model this unpredictability, but wrapping your head around how it happens does make it a little less unsettling, and can point to ways to route around it.

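The classic example of self-organized criticality is the sandpile model of Bak, Tang, and Wiesenfeld, a cellular automaton. Its grid is (usually) a directed graph in which every vertex has out-degree 4: each cell has four neighbors. Whenever a cell holds four or more grains, it topples, passing one grain to each neighbor, which may push those neighbors over the threshold in turn; grains that fall off the edge of the grid are lost. The model generalizes just fine to arbitrary directed graphs, where a vertex topples once it holds as many grains as it has out-neighbors. You know, like social graphs.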
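To make the toppling rule concrete, here is a minimal sketch of the sandpile on an arbitrary directed graph, with the square grid as one special case. The function names `stabilize`, `grid_graph`, and `drive` are hypothetical. It assumes every vertex has at least one out-edge, and it treats any out-neighbor missing from the height table as a sink where grains disappear (on the grid, that is the edge of the board).

```python
import random


def stabilize(heights, out_edges):
    """Topple unstable vertices until none remain; return the avalanche size.

    A vertex is unstable when it holds at least as many grains as it has
    out-neighbors. Toppling sends one grain along each out-edge. Grains sent
    to a vertex absent from `heights` (the sink) are lost, so the pile settles.
    Assumes every vertex has at least one out-edge.
    """
    unstable = [v for v in heights if heights[v] >= len(out_edges[v])]
    topples = 0
    while unstable:
        v = unstable.pop()
        threshold = len(out_edges[v])
        if heights[v] < threshold:
            continue  # already relaxed since it was queued
        heights[v] -= threshold
        topples += 1
        for w in out_edges[v]:
            if w in heights:
                heights[w] += 1
                if heights[w] >= len(out_edges[w]):
                    unstable.append(w)
        if heights[v] >= threshold:
            unstable.append(v)  # still over threshold, topple again later
    return topples


def grid_graph(n):
    """n x n grid where each cell points at its four orthogonal neighbors.

    Neighbors that fall outside the grid are never given a height, so they act as the sink.
    """
    return {(r, c): [(r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]
            for r in range(n) for c in range(n)}


def drive(n=20, grains=5000, seed=0):
    """Trickle grains onto random cells one at a time, recording each avalanche size."""
    rng = random.Random(seed)
    out_edges = grid_graph(n)
    heights = {cell: 0 for cell in out_edges}
    sizes = []
    for _ in range(grains):
        cell = (rng.randrange(n), rng.randrange(n))
        heights[cell] += 1  # the slow trickle of sand
        sizes.append(stabilize(heights, out_edges))
    return heights, sizes


heights, sizes = drive()
print(max(heights.values()) < 4)            # True: the pile is stable after every drop
print(sum(1 for s in sizes if s == 0), max(sizes))  # quiet drops vs. the largest avalanche
```

Nothing in `stabilize` looks beyond a vertex and its immediate out-neighbors, yet the avalanche sizes it records vary enormously from one identical-looking grain to the next. That is the "local interactions, global order" point made above.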

Online social ties are weaker than meatspace ones, but this has the interesting side effect of making the online world “smaller”: on average, fewer degrees separate two arbitrary people on Facebook or Twitter than two arbitrary people offline. On social media, users choose whether to share messages from one to another, so any larger patterns in message-passing activity are self-organized. One such pattern, notable enough to have its own name, is the internet mob. The social graph self-reorganizes in the wake of an internet mob. That reorganization is a phase transition, as the low become high and the high become low. But the mob’s target’s social status and ties are not the only things that change. Ties also form and break between users participating in, defending against, or even just observing a mob as people follow and unfollow one another.

Some mobs form around an explicit demand, realistic or not — the Colbert Report was never in any serious danger of being cancelled — while others identify no extrinsic goals, only effects on the social graph itself. Crucially, however, both forms restructure the graph in some way.

This structural shift always comes with attrition costs. Some information flows break and may never reform. The side effects of these local interactions are personal, and their costs arise from the idiosyncratic utility functions of the individuals involved. Often this means that the costs are incomparable. Social media also brings the cost of engagement way down; as Justine Sacco discovered, these days it’s trivial to accuse someone from halfway around the planet. But it’s worse than that; even after a mob has become self-sustaining, more people continue to pile on, especially when messages traverse weak ties between distant groups and kick off all-new avalanches in new regions of the graph.

Members of the black group are strongly connected to other members of their group, and likewise for the dark gray and white groups. The groups are interconnected by weak, “long-distance” ties. Reproduced from The Science of Social 2 by Dr. Michael Wu.

Remember Conway’s law? Systems copy the communication structures of the organizations that brought them into being. When those systems are made of humans, that communication structure is the social graph. This is where that low average degree of separation turns out to be a problem. By traversing weak ties, messages rapidly escape a user’s personal social sphere and propagate to ones that user will never intersect. Our intuitions prepare us for a social sphere of about a hundred and fifty people. Even if we’re intellectually aware that our actions online are potentially visible to millions of people, our reflex is still to act as if our messages only travel as far and wide as in the pre-social-media days.
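How much do a few weak ties shrink the graph? Below is a minimal sketch in the spirit of the Watts–Strogatz small-world model (a swapped-in toy, not the model behind the figure above). It assumes a ring of 200 people, each strongly tied to their two nearest neighbors on either side, and then adds five hypothetical long-distance ties chosen by hand. The helper names are illustrative.

```python
from collections import deque


def ring_lattice(n, k):
    """n people on a ring, each tied to the k nearest people on each side (strong, local ties)."""
    graph = {v: set() for v in range(n)}
    for v in range(n):
        for step in range(1, k + 1):
            w = (v + step) % n
            graph[v].add(w)
            graph[w].add(v)
    return graph


def average_separation(graph):
    """Mean degrees of separation over all ordered pairs, via breadth-first search."""
    total, pairs = 0, 0
    for source in graph:
        dist = {source: 0}
        queue = deque([source])
        while queue:
            v = queue.popleft()
            for w in graph[v]:
                if w not in dist:
                    dist[w] = dist[v] + 1
                    queue.append(w)
        total += sum(dist.values())
        pairs += len(dist) - 1
    return total / pairs


def add_weak_ties(graph, ties):
    """Add undirected long-distance ties between otherwise distant people."""
    for a, b in ties:
        graph[a].add(b)
        graph[b].add(a)


g = ring_lattice(200, 2)
before = average_separation(g)
# Five hypothetical weak ties joining opposite sides of the ring.
add_weak_ties(g, [(0, 100), (20, 120), (40, 140), (60, 160), (80, 180)])
after = average_separation(g)
print(f"average separation: {before:.1f} -> {after:.1f}")
```

Adding a tie can never lengthen a shortest path, and each of these ties turns a roughly fifty-hop trip into a single hop. So even a handful of weak ties pulls the average down across the whole ring, not just for the ten people who gained them.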

This is a cognitive bias, and there’s a name for it: scope insensitivity. Like the rabbits in Watership Down, able to count “one, two, three, four, lots,” beyond a certain point we’re unable to appreciate orders of magnitude. Furthermore, weak long-distance ties don’t give us much visibility into the size of the strongly-tied subgraphs we’re tapping into. Tens of thousands of individual decisions to shame Justine Sacco ended in her being the #1 trending topic on Twitter, and what do you suppose her mentions looked like? Self-organized criticality, with Sacco at ground zero. Sure, #NotAllRageMobs reach the top of the trending list, but they don’t have to go that far to have a significant psychological effect on their targets. (Sociologist Kenneth Westhues, who studies workplace mobbing, argues that “many insights from [the workplace mobbing] literature can be adapted mutatis mutandis to public mobbing in cyberspace,” and I agree.)

In the end, maybe the best we can hope for is user interfaces that encourage us to sensitize ourselves to the scope of our actions: to understand just how large a conversation we’re throwing our two cents into. Would people refrain from piling on someone already being piled on if they knew just how big the pile already was? Well, maybe some would. Some might do it anyway, out of malice or for the sake of virtue signaling. As Robert Kegan and Lisa Laskow Lahey point out in Immunity to Change, for many people, their sense of self “coheres by its alignment with, and loyalty to, that with which it identifies.” Virtue signaling is one way people express that alignment and loyalty to the groups they affiliate with, and these days it’s cheap to do that on social media. Put another way, the mobbings will continue until the perverse incentives improve. There’s not much any of us can individually do about that, apart from refraining from joining in on what appears to be a mob.

That’s a decision characteristic of what Kegan and Lahey call the “self-authoring mind,” contrasted with the above-mentioned “socialized mind,” shaped primarily “by the definitions and expectations of our personal environment.” Not to put too fine a point on it, over the last few years, my social media filter bubble has shifted considerably toward the space of people who independently came to a principled stance against participation in mobs. However, given that the functional programming community, normally a bastion of cool reason and good cheer, tore itself apart over a moral panic just a few months ago, it’s clear that no community is immune to flaming controversy. Self-organized criticality means that the call really is coming from inside the house.

Here’s the moral question that not everyone answers the same way I do, which has led to some restructuring in my region of the graph, a local phase transition: when is it right to throw a handful of sand on the pile?

Some people draw a bright line and say “never.” I respect that. It is a consistent system. It was, in fact, my position for quite some time, and I can easily see how that comes across as throwing down for Team Not Mobbing. But one of the implications of being a self-authoring system is that it’s possible to revisit positions at which one has previously arrived, and, if necessary, rewrite them.

So here’s the core of the conundrum. Suppose you know of some information that’s about to go public. Suppose you also expect, let’s say to 95% confidence, that this event will kick off a mob in your immediate social sphere. An avalanche is coming. Compared to it, you are a pebble. The ground underneath and around you will move whether you do anything or not. What do you do?

I am a preference consequentialist, and this is a consequentialist analysis. I won’t be surprised if how much a person agrees with it correlates with how much of a consequentialist they are. I present it mainly to braindump the abstractions I use to model these kinds of situations, as much in the interest of information sharing as anything else. There will be mathematics.

I am what they call a “stubborn cuss” where I come from, and if my only choices are to jump or be pushed, my inclination is to jump. Tor fell down on organizational accountability at first and, as Karen Reilly’s experience bears out, had been doing so for a while.

You don't just kick sociopaths down the road and play like you've done the necessary. That's extending their social license to operate.

— Meredith L. Patterson 💉💉 (@maradydd) June 3, 2016

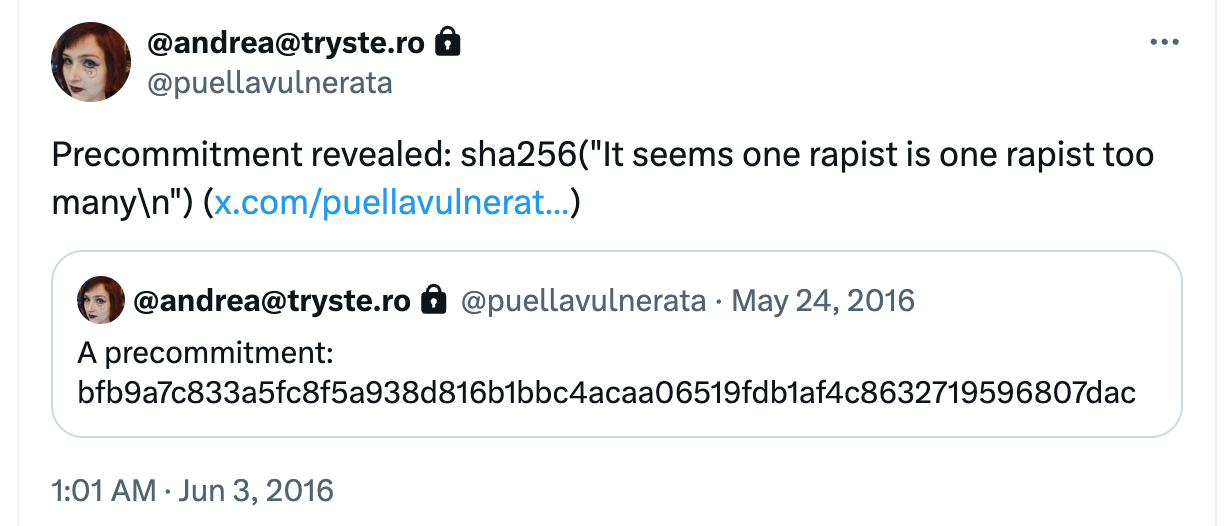

Tweets from the author @maradydd from June 3, 2016

So that’s the direction I jumped. To be perfectly honest, I still don’t have anything resembling a good sense of what the effects of my decision were versus those of anyone else who spoke up, for whatever reason, about the entire situation. Self-organized chaotic systems are confounding like that.

Tor had the chance to nip this in the bud back when Jake was just a plagiarist. They ignored it, and he graduated to sexual assault.

— Meredith L. Patterson 💉💉 (@maradydd) June 3, 2016


If you observe them for long enough, though, patterns emerge. Westhues has been doing this since the mid-1990s. He remarks that “one way to grasp what academic mobbing is is to study what it is not,” and lists a series of cases. “Ganged up on or not,” he concludes of a professor who had falsified her credentials and been the target of student protests about the quality of her teaching, “she deserved to lose her job.” Appelbaum had already resigned before the mob broke out. Even if the mob did have an extrinsic demand, his resignation couldn’t have been it, because that was already over and done with.

Okay, but what about the intrinsic outcomes, the radical restructuring of the graph that ensued as the avalanche settled? Lovecruft has argued that denying abusers opportunities to revictimize people is a necessary step in a process that may eventually lead to reconciliation. This is by definition a change in the shape of the social graph. Others counter that this is ostracism, and, well, that’s even true: that’s what it looks like when a whole lot of people decide to adopt a degrees-of-separation heuristic, or to play Exit, all at once.

Still others argue that allegations of wrongdoing should go before a criminal court rather than the court of public opinion. In general I agree with this, but when it comes to longstanding patterns of just-this-side-of-legally-actionable harm, criminal courts are useless. A bad actor who’s clever about repeatedly pushing ever closer to that line, or who crosses it but takes care not to leave evidence that would convince a jury beyond a reasonable doubt, is one who knows exactly what s/he’s doing and is gaming the system. When a person’s response to an allegation boils down to “no court will ever convict me,” as Tor volunteer Franklin Bynum pointed out, that sends a game-theoretically meaningful signal.

Signaling games are all about inference and credibility. From what a person says, what can you predict about what actions they’ll take? If a person makes a particular threat, how likely is it that they’ll be able to make good on it? “No court will ever convict me” is actually pretty credible when it comes to a pattern of boundary-violating behavior that, in many cases, indeed falls short of prosecutability. (Particularly coming from someone who trades on their charisma.) Courts don’t try patterns of behavior; they try individual cases. But when a pattern of boundary-pushing behavior is the problem, responding to public statements about that pattern with “you’ll never prove it” is itself an instance of the pattern. To quite a few people, it was about the loudest “I’m about to defect!” signal Appelbaum could possibly have sent in a game where the players have memory.

Courts don’t try patterns of behavior, but organizations do. TQ and I once had an incredibly bizarre consulting gig (a compilers consulting gig, which just goes to show you that things can go completely pear-shaped in bloody any domain) that ended with one of the client’s investors asking us to audit the client’s code and give our professional opinion on whether the client had faked a particular demonstration. Out of professional courtesy, we did not inquire whether the investor had previously observed or had suspicions about inauthenticity on the client’s part. Meanwhile, the client was emailing conflicting information to us, to our business operations partner, and to the investor (with whom I’d already been close friends for nearly a decade), trying to play us all off against each other, as if we didn’t all have histories of interaction to draw on in our decision-making. “It’s like he thinks we’re all playing classical Prisoner’s Dilemma, while the four of us have been playing an iterated Stag Hunt for years already,” TQ observed.
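TQ’s quip maps onto standard game theory, and the difference between the two games fits in a few lines of Python. This sketch uses textbook-style payoff numbers that are assumptions chosen for illustration (higher is better; “cooperate” means hunting stag in the Stag Hunt) and finds each game’s pure-strategy Nash equilibria by checking whether either player could do better by unilaterally switching actions.

```python
from itertools import product

ACTIONS = ("cooperate", "defect")

# Payoffs are (row player, column player). Numbers are illustrative assumptions.
PRISONERS_DILEMMA = {
    ("cooperate", "cooperate"): (3, 3),
    ("cooperate", "defect"): (0, 5),
    ("defect", "cooperate"): (5, 0),
    ("defect", "defect"): (1, 1),
}

STAG_HUNT = {
    ("cooperate", "cooperate"): (4, 4),  # both hunt the stag together
    ("cooperate", "defect"): (0, 3),     # I hunt stag alone; you take a hare
    ("defect", "cooperate"): (3, 0),
    ("defect", "defect"): (3, 3),        # both settle for hares
}


def pure_nash_equilibria(game):
    """Return every action pair where neither player gains by deviating alone."""
    equilibria = []
    for row, col in product(ACTIONS, repeat=2):
        row_pay, col_pay = game[(row, col)]
        row_best = all(game[(alt, col)][0] <= row_pay for alt in ACTIONS)
        col_best = all(game[(row, alt)][1] <= col_pay for alt in ACTIONS)
        if row_best and col_best:
            equilibria.append((row, col))
    return equilibria


if __name__ == "__main__":
    print("Prisoner's Dilemma:", pure_nash_equilibria(PRISONERS_DILEMMA))
    print("Stag Hunt:", pure_nash_equilibria(STAG_HUNT))
```

In the Prisoner’s Dilemma the only equilibrium is mutual defection, so someone who thinks that’s the game expects everyone to defect and plays accordingly. In the Stag Hunt, mutual cooperation is an equilibrium too: nobody gains by defecting on a partner who is cooperating, so players whose shared history gives them reason to trust each other can coordinate on the better outcome.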

Long story short (too late), the demo fell shy of outright fraud, but the client’s promises misrepresented what the code actually did to the point where the investor pulled out. We got a decent kill fee out of it, too, and a hell of a story to tell over beers. When money is on the line, patterns of behavior matter, and I infer from the investor’s action that there was one going on there. Not every act of fraud (or force, for that matter) rises to the level of criminality, but a pattern of repeated sub-actionable force or fraud is a pattern worth paying attention to. A pattern of sub-actionable force or fraud coupled with intimidation of people who try to address that pattern is a pattern of sociopathy. If you let a bad actor get away with “minor” violations, like plagiarism, you’re giving them license to expand that pattern into other, more flagrant forms of disregard for other people’s personhood. “But we didn’t think he’d go so far as to rape people!” Of course you didn’t, because you were doing your level best not to think about it at all.

Investors have obvious strong incentives to detect net extractors of value accurately and quickly. Another organization with similarly strong incentives, believe it or not, is the military. Training a soldier isn’t cheap, which is why the recruitment and basic training process aims to identify people who aren’t going to acquire the physical and mental traits that soldiering requires and turn them back before their tenure entitles them to benefits. As everyone who’s been through basic can tell you, one blue falcon drags down the whole platoon. Even after recruits have become soldiers, though, the military still has strong incentives to identify and do something about serial defectors. Unit cohesion is a real phenomenon, for all the disagreement on how to define it, and one or a few people preying on the weaker members of a unit damage the structure of the organization. The military knows this, which is why its Equal Opportunity program exists: a set of regulations outlining a complaint protocol, and a cadre trained and detailed to handle complaints of discriminatory or harassing behavior. No, it’s not perfect, by any stretch of the imagination. The implementation of any human-driven process is only as rigorous as the people implementing it, and as we’ve already discussed, subverting human-driven processes for their own benefit is a skill at which sociopaths excel. However, like any military process, it’s broken down into bite-sized pieces for every step of the hierarchy. Some of them are even useful for non-hierarchical structures.

Fun fact: National Guard units have EO officers too, and I was one. Again and again during the training for that position, they hammer on the importance of documentation. We were instructed to impress that not just on people who bring complaints, but on the entire unit before anyone has anything to bring a complaint about. Human resources departments will tell you this too: document, document, document. This can be a difficult thing to keep track of when you’re stuck inside a sick system, a vortex of crisis and chaos that pretty accurately describes the internal climate at Tor over the last few years. And, well, the documentation suffered, that’s clear. But now there’s some evidence, fragmentary as it may be, of a pattern of consistent and unrepentant boundary violation, intimidation, bridge-burning, and self-aggrandizement.

Even when the individual acts that make up a pattern are calculated to skirt the boundaries of actionable behavior, military commanders have explicit leeway to respond to the pattern with actions up to and including court-martial, courtesy of the general article (Article 134) of the Uniform Code of Military Justice:

Though not specifically mentioned in this chapter, all disorders and neglects to the prejudice of good order and discipline in the armed forces, all conduct of a nature to bring discredit upon the armed forces, and crimes and offenses not capital, of which persons subject to this chapter may be guilty, shall be taken cognizance of by a general, special, or summary court-martial, according to the nature and degree of the offense, and shall be punished at the discretion of that court.

In lieu of a catch-all clause like this one, Kink.com installed a bunch of new rules. A catch-all is an exception funnel, and it exists because sometimes people decide that having one is better than the alternative. Realistically, any form of at-will employment implicitly carries this clause too. If a person can be fired for no reason whatsoever, they can certainly be fired for a pattern of behavior. Companies have this option; organizations that don’t maintain contractual relationships with their constituents face paths that are not so clear-cut, for better or for worse.

But I take my cues about exception handling, as I do with a surprisingly large number of other life lessons, from the Zen of Python:

Errors should never pass silently.

Unless explicitly silenced.
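Those two lines actually distinguish three behaviors, which a short Python sketch can separate. The functions and inputs here are hypothetical: each one sums a list of strings that are supposed to be integers. The first swallows every error, the second explicitly silences exactly one named error type using the standard library’s `contextlib.suppress`, and the third handles the error while leaving a record of it.

```python
import contextlib
import logging

log = logging.getLogger(__name__)


def swallow_everything(values):
    """Anti-pattern: a catch-all except hides every failure, so nobody learns of it."""
    total = 0
    for v in values:
        try:
            total += int(v)
        except Exception:
            pass  # the error passes silently
    return total


def explicitly_silence(values):
    """Deliberately ignore one named error type; the decision is visible in the code."""
    total = 0
    for v in values:
        with contextlib.suppress(ValueError):
            total += int(v)
    return total


def handle_and_record(values):
    """Handle the error, but document it: log each bad value and report it back."""
    total, rejected = 0, []
    for v in values:
        try:
            total += int(v)
        except ValueError:
            log.warning("rejected non-integer value %r", v)
            rejected.append(v)
    return total, rejected


if __name__ == "__main__":
    values = ["1", "2", "three", "4"]
    print(swallow_everything(values), explicitly_silence(values), handle_and_record(values))
```

All three return 7 for the sample input, but only the last one leaves a trail. And `explicitly_silence(["1", None])` still raises `TypeError`, because explicit silencing covers only what it names, whereas the catch-all quietly returns 1 and nobody ever finds out a value went missing.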

When a person’s behavior leaves a pattern of damage in the social fabric, that is an exception going silently unhandled. The whisper network did not prevent the damage that has occurred. It remains to be seen what effect the mob-driven documentation will have. Will it succeed in warning others about a recurring source of error (Appelbaum’s handle is ioerror; I suppose nominative determinism wins yet again), or will the damaging side effects of the phase transition prove too overwhelming for some clusters of the graph to bear? Even other consequentialists and I might part ways here, because of that incomparability problem I mentioned earlier. I don’t really have a good answer to that, or to deontologists or virtue ethicists either. At the end of the day, I spoke up because of two things: 1) I knew that several of the allegations were true, and 2) if I jumped in front of the shitstorm and got my points out of the way, the allegations would be far harder to dismiss as some nefarious SJW plot. Sometimes cross-partisanship actually matters.

I don’t expect to change anyone’s mind here, because people don’t develop their ethical principles in a vacuum. That said, situations like these are the ones that prompt people to re-examine their premises. Once you’re at the point of post-hoc analysis, you’re picking apart the problem of “how did this happen?” I’m more interested in “how do we keep this from continuing to happen, on a much broader scale?” The threat of mobs clearly isn’t enough. Nor would I expect it to be, because in the arms race between sociopaths and the organizations they prey on, sociopath strategies evolve to avoid unambiguous identification and thereby avoid angry eyes. “That guy fucked up, but I won’t be so sloppy,” observes the sociopath who’s just seen a mob take another sociopath down. Like any arms race, it is destined to end in mutually assured destruction. But as long as bad actors continue to drive the sick systems they create toward their critical points, there will be avalanches. Whether you call it spontaneous order or revolutionary spontaneity, self-organized criticality is a property of the system itself.

The only thing that can counteract self-organized aggregate behavior is different individual behavior that aggregates into a different emergent behavior. A sick system self-perpetuates until its constituents decide to stop constituting it, but just stopping a behavior doesn’t really help you if doing so leaves you vulnerable. As lousy a defense as “hunker down and hope it all goes away soon” is over the long term, it’s a strategy, which for many people beats no strategy at all. It’s a strategy that increases the costs of coordination, which is a net negative for honest actors in the system. But turtling is a highly self-protective strategy, which poses a challenge: any proposed replacement strategy that lowers the cost of coordination among honest actors must also not be significantly less self-protective, for idiosyncratic, context-sensitive, and highly variable values of “significantly.”

I have some thoughts about this too. But they’ll have to wait till our final installment.